What makes a good

Business DSL

Domain-oriented abstractions are only the beginning

As I have described in a previous

post, I am convinced that, for true business agility, the domain experts in

an organisation have to contribute directly to the development of software.

Having them dump requirements into unstructured/informal documents, often Word,

and then making developers understand every detail and implement them

correctly, is way to slow and error prone. The solution I am advocating (and I

will provide proof that this actually works in future posts), is to create DSLs

for use by domain experts that allow them to directly model/specify their

contribution to the system. So what makes a good business DSL?

Abstractions aligned with the Domain

The absolut minimum is to

make sure the abstractions your DSL provides are aligned with the domain. This

aspect is fundamental; it is at the core of the definition of a DSL. While it

is not always easy to find out what these abstractions are, the point that they

need to be aligned with the domain doesn’t need any more repeating, so I’ll

leave it at that.

The importance of Notation

Users of a language aren’t

necessarily conscious of the abstractions that underlie the language, at least

not initially, when they decide whether they “like” the language. However, what

they always notice is the notation; this is what they primarily interact with.

So getting the notation(s) right is absolutely crucial for getting a language

adopted.

Two styles of notations are

commonly used in software engineering: textual, which we all know from programming,

and graphical, which we know from “modelling tools”. Within those two

notations, the degrees of freedom are limited in practice. In the text world,

you can adapt the keywords and the ordering of words. In the graphical world,

which, in practice, often means box-and-line diagrams, you can vary the shape

of boxes, the decorations of lines, and of course color and stuff.

But in many business domains,

there is way more notational variability. Tables play a huge role, not just for

data collection, but also for expressing multi-criteria decisions. Mathematical

symbols are very powerful and can make a huge difference in how complex

calculations appear. Prose-style text, where you use (fragments of) natural

language sentences, makes learning a language much easier for many

non-programmers. And of course, many domains benefit from mixing some of those:

using math in text, embedding prose in diagrams, embedding text in tables. You

might even want to provide several notations for the same abstractions where one

is easier to learn and the other one is more productive (because it scales

better in terms of model size or complexity).

So, as part of your domain

analysis, make sure you explore different notation options, select a tool that

can support all of them, and look out for existing notations in the domain

which you can adopt.

A great IDE

The next ingredient for any

language, business DSL or not, is a great IDE. These days, people just don’t

care about your language if it doesn’t have a good IDE. A good IDE supports

code completion and error reporting, but also more advanced features such as

test execution, refactorings and debugging. You expect this from your Java (or

whatever) IDE, and your DSL users expect that, too.

One of the major

contributions of language workbenches, compared to previous language tooling,

is that, as part of developing a language, you more or less automatically also

get an IDE. Language development implies IDE development.

I will discuss error

reporting, debugging and (test) execution below, so let me briefly expand on

refactoring here. Refactoring is defined as changing the structure of a

program, while retaining its semantics. Usually you perform a refactoring in

order to improve some quality attribute, typically modularity, extensibility or

understandability. Refactorings are often motivated by the need to evolve the

software in the sense that you have to realise additional requirements or

define a variant of the program (suggesting to “factor out” the commonalities).

Quite obviously, this also applies to models created with DSLs: insurance contracts,

medical algorithms or communication protocol definitions evolve too, often

quite frequently and significantly. So the IDE must support refactorings that

are aligned with the those kinds of changes that occur regularly. And by the

way: your language must support the corresponding abstractions, but that’s for

another post.

There is also an interaction

between IDE support and the syntax. Remember SQL? In SQL, you write

SELECT <fields> FROM <table> WHERE ...

This is problematic

int terms of IDE support, because, when you enter the fields, you have not yet

specified from which table; code completion for those fields (based on the

table) is not possible. This is the reason why more modern query languages have

reversed the syntax:

from <table> SELECT <fields> WHERE ...

Keep this in mind when you

design a language.

Analyses and Error Reporting

A very much under-appreciated

criterion for a good DSL, its alignment with the domain and its acceptance by

users is the quality of error messages (this is also true for programming

languages, there is interesting research on this). This is all the more true the more

expressive or sophisticated (trying to avoid the negatively connotated word

“complexity”) your language is.

We all know the situation

where some not-so-computer-savvy person complains that something “doesn’t work”

and asks us for help. We then read the error message carefully, and

figure out immediately what needs to be done. We also know the situation where,

after reading error message, we are none the wiser and still have no clue how

to fix it. It’s clear that in the latter case, we have to improve the message.

But what can we learn from the former? Maybe that users have given up on

reading the message, because error messages usually suck.

What can we do? Well, one

thing is to just treat error messages as a core part of the user experience.

Design them carefully! Maybe even engage in some low-key usability engineering

and ask users whether they are helpful. There is also a tooling issue: often an

error message can only be attached to oneprogram element even

though the error is about the relation of severalprogram elements.

So the ability to attach an error to multiple locations (or clickably refer to

multiple elements from the message) helps as well.

However, the elephant in the

room is the precision of the underlying analyses: you can only report problems

that are discovered and pinpointed precisely by the underlying analyses. More

precise analyses, in turn, imply more implementation effort and more runtime

cost. In general, for a precise analysis and a good error message, you have to

recover (some aspects of) the domain semantics from its encoding in the

program.

When you design a DSL, you

can influence both aspects. First, ideally design your DSL in a way that

prevents (some) errors from being made, or engineer your IDE to prevent them

automatically. XML is an interesting example here: your IDE can automatically

ensure that every opening tag has a corresponding closing tag. However, remember

that XML documents are trees; the need for closing tags is an artifact of the

concrete syntax. Maybe you can change the language to directly use a tree

syntax that avoids the need for closing tags in the first place. This is

essentially what JSON does.

However, as the

expressiveness of your DSL grows, the degree of freedom allowed by the DSL will

be big enough for your users to shoot themselves into their foot in non-trivial

ways. In this case, design the language in way that makes the analyses simpler.

The good thing is that a language design that simplifies analyses is also a

language design that is generally more closely aligned with the domain. For

example, detecting a dead state in a state machine is much easier if it is

encoded as a first-class state machine as opposed to when it is encoded as a

switch statement in C. The synergies between analysis and language design are

numerous; I will discuss this more in a later post, but you can also check out

this booklet.

Visualisation and Reporting

Error messages are usually

local, associated with one (or maybe a few) program elements. However, some

problems relate to the overall structure of the program. This leads to two

problems. First, finding these problems can be very expensive computationally;

it might be infeasible to perform the analysis in realtime, as the user edits

the program. Global (qualified) name uniqueness is the obvious example. Second,

they might be based on heuristics, i.e., there might not be a clear distinction

between right and wrong, but you can identify a spectrum of “badness”.

The same solution solves both

problems: reporting (we treat a visualisation essentially as a report that uses

a graphical syntax). A report is run on demand; it is ok for it to take a

little while. It analyses (usually global) properties of a model and then shows

the results in a way that lets the user detect patterns or trends.

For example, the sizes or

color of bubbles in a visualisation can represent a characteristic that might

be problematic (e.g., the LOC in a function). In a textual report, you can sort

the result entries according to some metric; for example, a profiler can show

the slowest parts of the program at the top of the list. Or you can highlight

the differences between subsequent runs of the report to make users aware of

what changed (maybe for the worse).

When you design the feedback

system for a language, it is useful to explicitly distinguish between (more or

less local) error reporting on the one hand, and (typically global and

expensive) reporting and visualisation. Running the latter on the CI server, in

regular intervals, is a good idea.

Testing DSL models

Test-driven, or at least

test-supported development has taken hold in the developer community. And for

good reason: tests assure that your code works correctly, ensure that it does

the correct thing, and provides a safety-net during evolution and refactoring.

As a consequence, testing frameworks and tools, integrated with the IDE, are

ubiquitous. So you want that for DSLs, too! Your telco pricing expert also

wants to make sure that he doesn’t lose the company money because his pricing

algorithm is buggy!

However, for testing to be

feasible, the abstractions and notations used for testing must be aligned with

the core of your DSL. For example, to test an old age insurance policy, users

should be able to describe the employment history of a customer, using terms

relevant to the domain, and then run what is essentially an integration test on

this customer: what will his monthly pension be once he retires, and how will

it change over time?

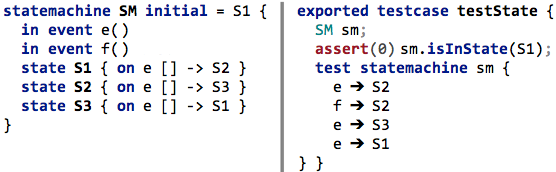

A simple state machine DSL (left)

and a DSL for testing whether the transitions lead to the expected target

states (right).

The degree to which you rely

on (the equivalents of) units tests vs. integration tests depends on the domain

and needs to be figured out during domain analysis. In any case, make sure you

consider testing, and testability, of your DSL programs an inherent part of

language design.

To increase the acceptance of

testing, make sure that running tests is painless for DSL users. In particular,

if the execution of your DSL relies on code generation and the subsequent

compilation, packaging and deployment chain, the turnaround time for tests

might become too long. Make sure you provide a way for executing the tests with

essentially zero overhead. One way of achieving this is to also provide an

in-IDE interpreter, i.e., a way of executing programs without any code

generation. Since interpreters usually do not have to reproduce non-functional

concerns faithfully (i.e., they can be slow :-)), implementing one for your

language isn’t that much effort, especially when using suitable frameworks.

Simulation and Debugging

The interpreter has an

additional benefit: it also lets you define a simulator, with which users can

“play” with the models. In my experience, this ability is often perceived as

the main benefit of a DSL and the required formalisation of a domain in the

first place.

A simulator is closely

related to a debugger for integration scenarios. Both rely on an interpreter

(or an instrumented runtime). The difference is that a debugger illustrates,

explains (and allows to control) a previously written program. A simulator lets

users interactively play with the program, enter/change data or trigger events.

The difference can be blurry, but it becomes clearer when behavior changes over

time and state change becomes a factor.

Building debuggers can be a

lot of work, partially because language workbenches don’t support this to the

degree they support other aspects of language development. Hopefully this changes

in the future. However, an interpreter framework that is designed to be

“debuggable” can make a big difference. More on simulation and debugging in a

future post.

Wrap up

Nothing new here for the

software engineer, I guess. All of the things I am suggesting here are more or

less similar to what one does in programming languages. My goal with this post

was to point out why and how these things are relevant in DSLs (maybe

especially so, as in error messages) and how the ability to design your own

language may simplify the process. Let me know what you think!